The frustrating thing about robotics is the amount of time you have to spend on problems that aren’t really to do with robotics at all. The Autonomous Deep Learning Robot comes with OpenCV4Tegra, a specially accelerated version of the OpenCV vision library. It comes with Caffe, a neural network tool that allows you to do pretty good object recognition. It also comes with Robot Operating System (ROS).

So how hard should it be to make these work together and get your robot to recognise the objects that it sees?

HARD!

There’s a nice package called ros_caffe that does just what you want: it reads the camera feed and publishes a ROS topic with a list of the top five “classifications” Caffe has made. The problem is getting the damn thing to compile. I’ll get to that shortly, but first let me vent.

I first came across Linux around 1994 when an excitable friend gave me a pile of 3.5″ floppy disks with an early distribution. It took around a day to install, compile the kernel and the rest of it. My conclusion at the time was that open source was for people with plenty of time on their hands. I use a lot of open source these days, but my original conclusion hasn’t changed. I would happily pay $200 for ROS if it meant I could avoid the sort of problems I’ve had getting this simple module to compile.

The rest of this post is for anyone stuck with the same problem, which is probably not many people. But if you are one of them, you’ll end up with a robot that can really “see”.

First get Caffe to work by following my “missing instructions” for setting up your Autonomous Deep Learning Robot. Now, set up a catkin workspace if you haven’t already done so and download ros_caffe:

cd catkin_ws/src
git clone https://github.com/tzutalin/ros_caffe.git
cd ..
catkin_make

You’ll probably see the same link errors I did, as ROS attempts to link the new module against version 2.4.8 of OpenCV. The problem is that the installed version of OpenCV is 2.4.12.2, not 2.4.8, and ROS seems to be hardwired to link to 2.4.8.

After a lot of hair-pulling and searches, I found the culprits.

cd /opt/ros/
grep -R libopencv_

Here they are:

indigo/share/pano_core/cmake/pano_coreConfig.cmake
indigo/share/cv_bridge/cmake/cv_bridgeConfig.cmake
indigo/share/compressed_image_transport/cmake/compressed_image_transportConfig.cmake
indigo/share/image_proc/cmake/image_procConfig.cmake
indigo/share/pano_py/cmake/pano_pyConfig.cmake
indigo/share/image_geometry/cmake/image_geometryConfig.cmake
indigo/lib/pkgconfig/cv_bridge.pc
indigo/lib/pkgconfig/pano_core.pc
indigo/lib/pkgconfig/compressed_image_transport.pc
indigo/lib/pkgconfig/pano_py.pc
indigo/lib/pkgconfig/image_geometry.pc
indigo/lib/pkgconfig/image_proc.pc
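If you want to double-check that these files really do point at libraries that aren’t on disk, here’s a minimal Python sketch of the same search with an existence check added. It assumes ROS lives under /opt/ros/indigo and that the OpenCV references are absolute paths, as they were on my robot; find_missing and referenced_libs are names I’ve made up for illustration, not part of ROS.

```python
import os
import re

# Assumption: ROS Indigo is installed here, as on my robot.
ROS_ROOT = "/opt/ros/indigo"

# An absolute path to any OpenCV shared library, with or without a version suffix,
# e.g. /usr/lib/arm-linux-gnueabihf/libopencv_core.so.2.4.8
OPENCV_LIB = re.compile(r"(?:/[\w.+-]+)*/libopencv_\w+\.so(?:\.\d+)*")


def referenced_libs(text):
    """Return the OpenCV library paths named in a CMake config or pkg-config file."""
    return OPENCV_LIB.findall(text)


def find_missing(root=ROS_ROOT):
    """Map each .cmake/.pc file under root to the OpenCV libraries it names that don't exist."""
    missing = {}
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            if not name.endswith((".cmake", ".pc")):
                continue
            path = os.path.join(dirpath, name)
            with open(path) as f:
                libs = referenced_libs(f.read())
            absent = sorted(set(lib for lib in libs if not os.path.exists(lib)))
            if absent:
                missing[path] = absent
    return missing


if __name__ == "__main__":
    # Read-only: just prints each offending file and the libraries it can't find.
    for path, libs in sorted(find_missing().items()):
        print(path)
        for lib in libs:
            print("    missing: " + lib)
```

It only reads files, so it’s safe to run before you change anything.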

These all reference non-existent 2.4.8 libraries. I haven’t found a better way of proceeding than to manually replace all references to

/usr/lib/arm-linux-gnueabihf/libopencv_XXX.so.2.4.8

with

/usr/lib/libopencv_XXX.so

Also, completely remove any references to libopencv_ocl.so.

Insane. Please tell me if you find a better way. [UPDATE – I’ve had some suggestions from ROS experts that you might want to try instead]
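If you’d rather not edit a dozen files by hand, the edit can be scripted. The Python below is a sketch, not a tested tool: it assumes the library lists in the .cmake files are separated by semicolons and those in the .pc files by spaces, and the --apply flag is my own invention. By default it only lists the files it would change; with --apply it rewrites them and keeps a .bak copy of each. You’ll need sudo to write under /opt/ros.

```python
import os
import re
import sys

# Assumption: ROS Indigo is installed here, as on my robot.
ROS_ROOT = "/opt/ros/indigo"

# A hard-coded 2.4.8 path, e.g. /usr/lib/arm-linux-gnueabihf/libopencv_core.so.2.4.8
OLD_LIB = re.compile(r"/usr/lib/arm-linux-gnueabihf/libopencv_(\w+)\.so\.2\.4\.8")
# Any absolute path to libopencv_ocl.so, versioned or not.
OCL_PATH = r"(?:/[\w.+-]+)*/libopencv_ocl\.so(?:\.\d+)*"
# Assumption: the OCL path plus one neighbouring ';' (CMake list) or ' ' (pkg-config) separator.
OCL_REF = re.compile(r"[; ]" + OCL_PATH + "|" + OCL_PATH + r"[; ]?")


def patch_text(text):
    """Drop libopencv_ocl references, then point the 2.4.8 paths at /usr/lib/libopencv_XXX.so."""
    text = OCL_REF.sub("", text)
    return OLD_LIB.sub(r"/usr/lib/libopencv_\1.so", text)


def patch_tree(root, apply=False):
    """Return the .cmake/.pc files under root that need patching; rewrite them if apply is True."""
    changed = []
    for dirpath, _dirs, files in os.walk(root):
        for name in sorted(files):
            if not name.endswith((".cmake", ".pc")):
                continue
            path = os.path.join(dirpath, name)
            with open(path) as f:
                original = f.read()
            patched = patch_text(original)
            if patched == original:
                continue
            changed.append(path)
            if apply:
                with open(path + ".bak", "w") as f:  # keep a copy of the original
                    f.write(original)
                with open(path, "w") as f:
                    f.write(patched)
    return changed


if __name__ == "__main__":
    apply = "--apply" in sys.argv[1:]  # hypothetical flag: dry run unless given
    for path in patch_tree(ROS_ROOT, apply):
        print(("patched " if apply else "would patch ") + path)
```

Run it once without --apply and compare its list with the files above before letting it loose. Because patched files no longer match, running it a second time changes nothing.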

Once you’ve done that, edit catkin_ws/src/ros_caffe/CMakeLists.txt and change the line

target_link_libraries(ros_caffe_test ${catkin_LIBRARIES} ${OpenCV_LIBRARIES} caffe glog)

to

target_link_libraries(ros_caffe_test ${catkin_LIBRARIES} ${OpenCV_LIBRARIES} libcaffe-nv.so glog)
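The CMakeLists.txt change is small enough to make by hand, but if you’re setting up more than one robot it can be scripted too. Here’s a minimal sketch, assuming it’s run from catkin_ws and that the link line looks like the one above; use_nv_caffe is a made-up helper name.

```python
import os
import re

# Assumption: run from catkin_ws, with ros_caffe cloned into src/ as above.
CMAKE_FILE = os.path.join("src", "ros_caffe", "CMakeLists.txt")

# The bare "caffe" link target inside target_link_libraries(ros_caffe_test ...).
# \b stops it matching the "caffe" in ros_caffe_test or in libcaffe-nv.so.
CAFFE_TARGET = re.compile(r"(target_link_libraries\(ros_caffe_test\b[^)]*?)\bcaffe\b")


def use_nv_caffe(cmake_text):
    """Swap the generic caffe library for libcaffe-nv.so in the ros_caffe_test link line."""
    return CAFFE_TARGET.sub(r"\1libcaffe-nv.so", cmake_text)


if __name__ == "__main__":
    if not os.path.isfile(CMAKE_FILE):
        print("not found: " + CMAKE_FILE + " (run this from catkin_ws)")
    else:
        with open(CMAKE_FILE) as f:
            text = f.read()
        with open(CMAKE_FILE, "w") as f:
            f.write(use_nv_caffe(text))
```

Running it twice is harmless, since the patched line no longer contains a bare caffe target.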

Then go back to catkin_ws and recompile:

catkin_make

and it should do so without errors. If you do get an error, it’s possible I’ve missed a step, in which case ask me in the comments.

Now the reward! Open up five terminal windows to the robot and run one of the following commands in each one:

roslaunch turtlebot_bringup minimal.launch
roslaunch turtlebot_bringup 3dsensor.launch
roslaunch turtlebot_teleop keyboard_teleop.launch
rosrun ros_caffe ros_caffe_test
rostopic echo /caffe_ret

Now drive your robot around using the keyboard (make sure the window with keyboard_teleop.launch is in focus). Look at the window with rostopic echo /caffe_ret in it and you should see a stream of samples along these lines:

data: [0.832006 - n04554684 washer, automatic washer, washing machine]
[0.0191289 - n03691459 loudspeaker, speaker, speaker unit, loudspeaker system, speaker system]
[0.0161815 - n03976467 Polaroid camera, Polaroid Land camera]
[0.0135932 - n04447861 toilet seat]
[0.0115129 - n04125021 safe]

---
data: [0.839569 - n04554684 washer, automatic washer, washing machine]
[0.0211603 - n03691459 loudspeaker, speaker, speaker unit, loudspeaker system, speaker system]
[0.012341 - n03976467 Polaroid camera, Polaroid Land camera]
[0.0117927 - n04447861 toilet seat]
[0.0110748 - n04125021 safe]

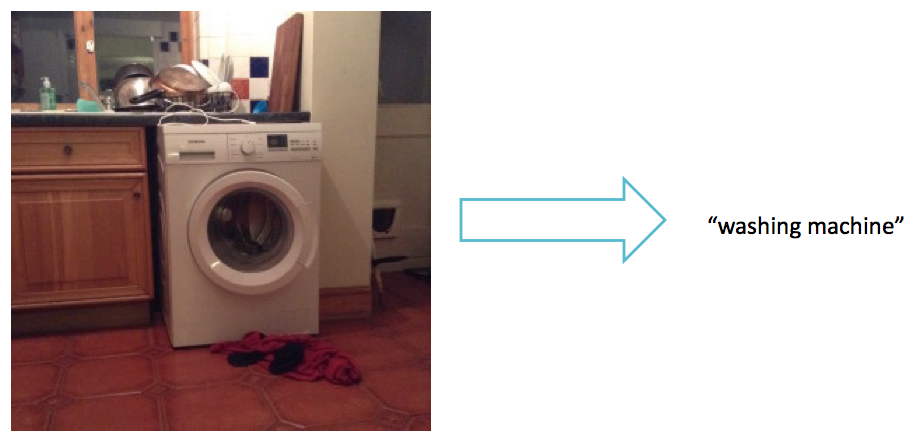

And yes, that is from my robot, who is staring fixedly at the washing machine in the kitchen. The first entry in each sample is the most probable hit, so the robot interprets the image as a washing machine with about 83% probability. The other guesses are much less likely, although I guess it does look like a toilet seat from a certain angle (only around 1.2 to 1.4% probability).

In other words, what the robot sees has been converted to the string “[0.839569 - n04554684 washer, automatic washer, washing machine]”.
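If you want to do something with these results rather than just watch them scroll past, the string is easy to pull apart. Here’s a rough Python sketch that parses one sample into (probability, synset, labels) entries and, when run inside a ROS environment, subscribes to /caffe_ret and logs the best guess. It assumes the topic carries a std_msgs/String (which is what the data: field in the rostopic echo output suggests) and that each entry keeps the [score - synset labels] layout shown above; parse_caffe_ret and the node name caffe_ret_listener are made up.

```python
import re
from collections import namedtuple

# One guess: Caffe's score, the ImageNet (WordNet) synset ID and its human-readable labels.
Guess = namedtuple("Guess", ["probability", "synset", "labels"])

# Assumption: each entry looks like "[0.832006 - n04554684 washer, automatic washer, washing machine]".
ENTRY = re.compile(r"\[\s*([0-9.eE+-]+)\s+-\s+(n\d+)\s+([^\]]*)\]")


def parse_caffe_ret(data):
    """Parse the text of one /caffe_ret sample into Guess tuples, best first."""
    guesses = [
        Guess(float(prob), synset, [label.strip() for label in labels.split(",")])
        for prob, synset, labels in ENTRY.findall(data)
    ]
    return sorted(guesses, key=lambda g: g.probability, reverse=True)


def main():
    try:
        import rospy
        from std_msgs.msg import String  # assumption: /caffe_ret carries std_msgs/String
    except ImportError:
        print("rospy not found; source /opt/ros/indigo/setup.bash first")
        return

    def on_result(msg):
        guesses = parse_caffe_ret(msg.data)
        if guesses:
            best = guesses[0]
            rospy.loginfo("%s (%.1f%%)", best.labels[0], 100 * best.probability)

    rospy.init_node("caffe_ret_listener")  # hypothetical node name
    rospy.Subscriber("/caffe_ret", String, on_result)
    rospy.spin()


if __name__ == "__main__":
    main()
```

Run it in place of the rostopic echo window. Keeping the parsing in its own function means you can check it against a saved sample without the robot running.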