“You know, farming looks mighty easy when your plow is a pencil, and you’re a thousand miles from the corn field.” – Dwight D. Eisenhower

The day before, the Vegebot’s end effector had exploded on first contact with a lettuce, scattering acrylic shards across the lettuce lanes and rendering the crop in that corner of the field unsaleable. Now, under a dark grey sky and light rain, the wind was blowing hard, rocking the overhead camera back and forth. Confused, the mechanical arm lunged repeatedly, missing its targets. It had not been a good week. Then, with a peal of thunder, a bolt of lightning struck a nearby tree. The author and collaborators were stranded in the middle of a flat, desolate field, next to a large, malfunctioning metal robot.

The Vegebot project began in 2016 with the goal of building the world’s first lettuce harvesting robot. It was a collaboration between the Bio-Inspired Robotics Lab (BIRL) at the University of Cambridge and G Growers, a major UK farming group. The project formed the basis of my PhD and I worked hard on it before and during the pandemic. I’d like to share a few of the lessons learned, for others entering the surprisingly difficult world of agricultural robotics.

Development Methodology

The accompanying video gives an overview of the robot’s development, which had a particular methodology:

Development in the field. Sometimes there’s a reluctance on the part of researchers to get their hands dirty, and a preference to stay as long as possible in the nice comfortable lab. In the case of agricultural robotics, this is unwise. Rain, wind, bumpy ground, variable lighting conditions profoundly condition the robot’s design. The lab is nothing, repeat nothing, like life in the field. We worked in the field as often as possible, sometimes multiple times per week. You need to get your hands dirty.

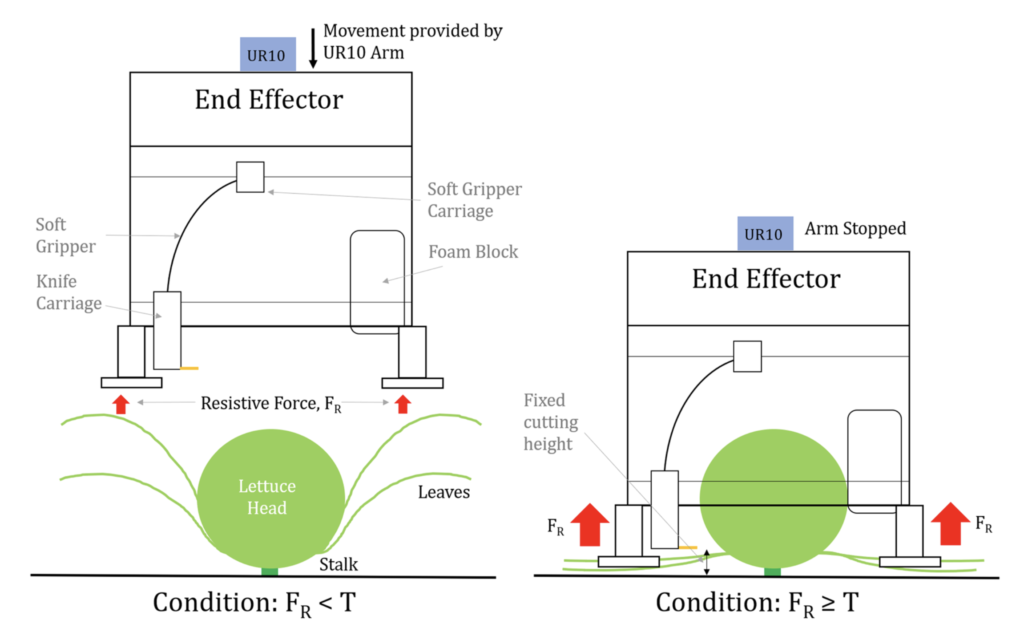

Build and test, rather than design. As the video shows, a core problem was how to effectively cut the lettuce stalk. It’s dense, like hacking through a cucumber. Rather than making elaborate plans or designs, the team built quick and dirty prototypes of a stalk cutter, trying out different form factors and actuator systems: linear electric, rotary electric and linear pneumatic (see the video). The lettuce was initially held in place by a plastic bucket from Poundland. The linear pneumatic actuator had a good force-velocity profile, and was the only solution that reliably cut; it became the basis of all future iterations.

One problem at a time. Once the core cutting mechanism was identified, the rest of the end effector development was a series of rapid, field-tested iterations, each one solving the next remaining major problem. Early versions had the actuators in line with the blade and next to the ground; these would get stuck on neighbouring lettuces. So we raised the actuators off the ground and tried various systems of transmission (struts, timing belt) to drive the blade. This created a robustness problem, which led to a solid, stainless steel end effector. This solved robustness, but created a weight problem. And so on and so on. The point is that iterations were fast, immediately tested in the field and solved one problem each time. A similar process was followed with the software.

Behaviour separation

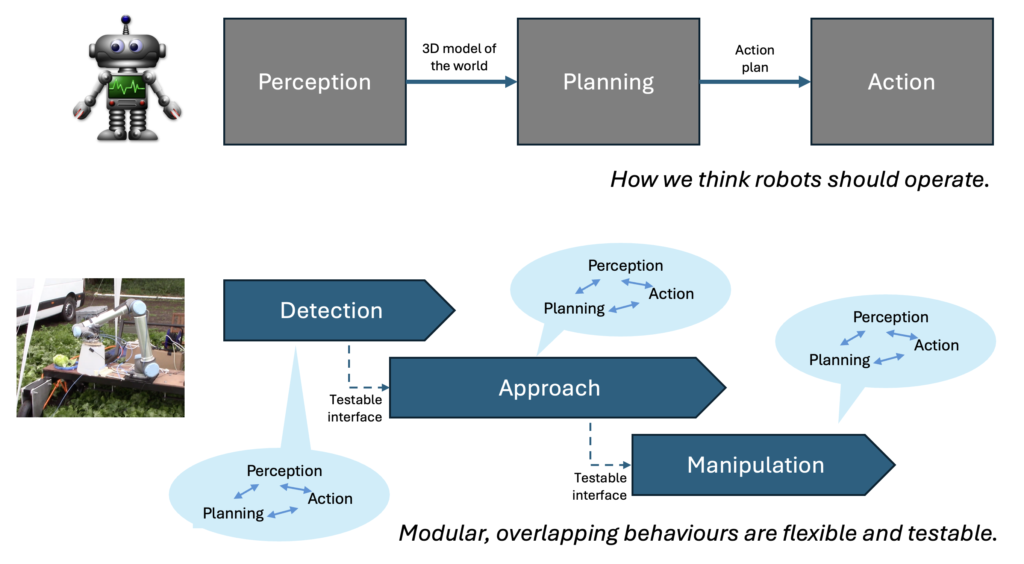

The classic way of thinking about robot architecture is as a perception-planning-action pipeline. We divided the work up in slightly different chunks, which corresponded to behaviours:

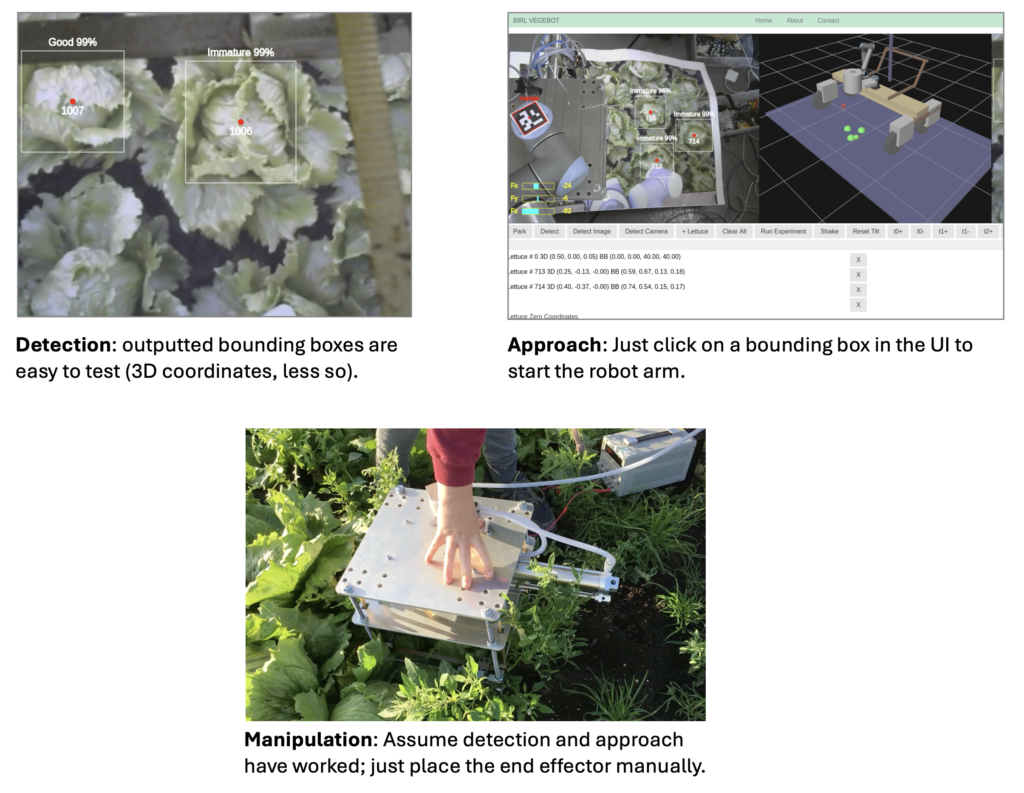

- Detection (find the lettuces and output their 2D screen coordinates)

- Approach (move the end effector to envelop the target lettuce)

- Manipulation (grasp the lettuce and cut the stalk).

Each of these behaviours potentially has its own perception-plan-action loop. For some crops, detection might involve pushing aside leaves that obstruct the view; manipulation likely involves real-time sensing. Importantly, each behaviour can be dealt with by a different team or engineer: detection (Julia), approach (Simon), manipulation (Josie), and can be tested pretty much independently.

The interface between the behaviours is key. The reader may be puzzled as to why detection should output 2D lettuce coordinates and not inferred 3D positions. A strong motivation for this is testing. By sticking to screen coordinates, you can visually inspect the output of the detection behaviour and immediately know if the bounding boxes are in the correct place. You can also independently test the approach behaviour by simply clicking on lettuces in the UI’s video feed and see if the end effector moves correctly to them. Using 3D coordinates, which are likely inaccurate anyway, would have made testing the two behaviours much more complicated. Manipulation was tested independently of the first two behaviours by manually placing the end effector over the lettuce and triggering the blade. In this project, manipulation required almost zero software.

The Approach Behaviour

As well as the overall software infrastructure, my principal task was to solve approach. As a naive roboticist starting my PhD, I assumed that the robot should first figure out the 3D position of the lettuces, then just move the end effector there with position control. Would that life were that simple.

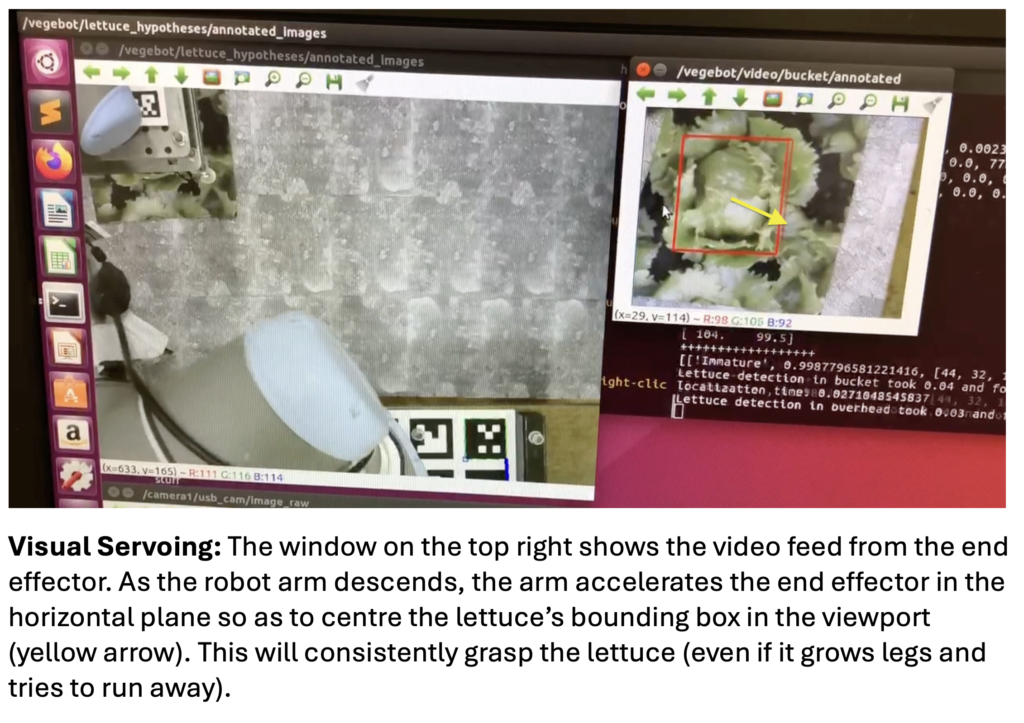

Inferring an accurate 3D location is hard at the best of times; try doing it in a field when it’s raining and the wind is buffeting your overhead camera support. I found myself constantly recalibrating the robot’s vision system after each jolt or bump. I soon switched to visual servoing, a form of velocity control, using the detected 2D bounding box around the lettuce to control the movement of the robot arm. The end effector had an embedded webcam, so by keeping the lettuce bounding box centred in the viewport during approach, it would always reach its target without the need for any sort of calibration. It was slower, but very robust.

Force feedback from the lettuce’s leaves and the underlying earth gave the signal of when to stop descending. Again, this avoided the need for accurate estimation of the ground position.

I later experimented with other methods to improve the speed of approach. By initially using visual servoing, the robot could gather a dataset that mapped from a 2D bounding box to the final 3D robot arm position. A simple neural network trained with PyTorch learned this mapping and the robot could then move the end effector to the target directly. Why a neural network – isn’t this just a linear function that can be calculated with conventional methods? Yes, until reality becomes non-linear, for example if your camera comes loose and gets affected by gravity when the platform tilts. By using a neural network the robot can construct its own implicit “body image”. If the body shape changes (perhaps the robot gets dented) and the end effector starts missing its target, the approach behaviour can temporarily fall back to the slower, more robust visual servoing and gather a revised dataset.

The final control method combined both options: an initial, learned, open-loop ballistic movement to quickly get the end effector into the right ballpark position (as predicted by the neural network), followed by a short, feedback-driven movement (visual servoing) to get an exact grip. This is pretty similar to how two-stage human reaching and grasping appears to work.

Takeaway: in an unstructured, unpredictable environment, avoid building detailed 3D models of the world to guide your movement; they’re always wrong. Incorporate feedback and learn shortcuts for speed.

It’s Hard

There’s one more problem: the seasons. Having made a big deal above of doing as much as possible in the field, agritech’s dirty little secret is that the field isn’t available for much of the year. What do you do in the winter? That’s when you focus on software, simulation (maybe) and dataset processing. And get ready to move as quickly as possible come the spring.

Robotics is astonishingly hard, even for the technically sophisticated who encounter it for the first time. There’s something about the real physical nature of the world that defies the most sophisticated algorithm. Reality is deeply uncooperative.

Agricultural robotics makes normal robotics look like a walk in the park.

With thanks to Josie Hughes, Julia Cai, Fumiya Iida and the many others who worked on the project. A shorter version of this post appeared on the RobotLux website.