Rodney Brooks took the stage at the 2016 edition of South by Southwest for an hour-long interview. The founder of two world-class robotics companies, iRobot and Rethink Robotics, and an emeritus professor at MIT, he had every right to feel satisfied at this late stage in his career. Yet the air of disappointment in the interview was unmistakeable. “Not much progress was made,” he says at around 35:15 into the video, in the tone of Peter Fonda saying “We blew it”. Back in the 1980s Brooks started a revolution in robotics with something called the subsumption architecture, a revolution that has seemingly petered out. This is the story of that revolution, of the eccentrics behind it and whether they really failed, or created the future of robotics.

I should warn the reader at the outset that I’m a fan of forgotten technologies. From the days my high school chemistry teacher waxed lyrical about the delights of phlogiston (a non-existent chemical substance invoked in the 18th century to explain combustion), via an obsession with neural networks in the 1980s (a field that lay fallow for almost 30 years) through to holding the torch for Virtual Reality throughout the 1990s (I characteristically lost interest as soon as it became mainstream again) and today devouring books from the 1950s on Cybernetics, I love digging into ideas that the world has left behind. I’m attracted to the offbeat and fascinated by past orthodoxies. So bear that in mind as we proceed.

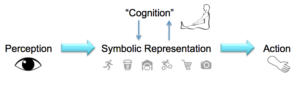

The Subsumption Architecture used to be the future. In 1985, a rebellious young researcher named Rodney Brooks published the innocently titled paper, “A Robust Layered Control System for a Mobile Robot”, basically implying that the history of Artificial Intelligence research up to that point was bunk. Till then it was assumed that the job of AI was to derive and manipulate a high-level symbolic representation of the world and use it to decide on actions to be taken. In a robot’s brain, the world might be represented thus:

The Door is locked. The Key is on the Table.

By storing and interpreting these symbolic nouns and predicates, the AI could be said to “understand” the world. Consulting a stored database of “knowledge”, it could then figure out that picking up the key might be a good idea. How this representation would be constructed from a raw video camera feed, or how the robot would coordinate its motors to actually pick up the key and unlock the door were mere implementation details to be left for later. The real action was clearly in parsing sentences like the two above, because that’s what humans do. Right?
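To make that concrete, here is a minimal Python sketch of the symbolic approach. The tuple encoding of the facts and the `suggest_action` rule are my own illustrative assumptions, not taken from any particular AI system of the period.

```python
from typing import Optional, Set, Tuple

# The robot's "world model": the two sentences above, encoded as tuples.
# This encoding is hypothetical, chosen only for illustration.
facts: Set[Tuple[str, ...]] = {
    ("locked", "door"),
    ("on", "key", "table"),
}


def suggest_action(world: Set[Tuple[str, ...]]) -> Optional[str]:
    """Consult the stored facts and propose an action, if any rule applies."""
    # Hypothetical rule: a locked door plus a key lying somewhere
    # suggests going to fetch the key.
    if ("locked", "door") in world:
        for fact in world:
            if len(fact) == 3 and fact[0] == "on" and fact[1] == "key":
                return f"pick up key from {fact[2]}"
    return None


print(suggest_action(facts))
```

Note what is missing: nothing here says how the tuples get extracted from a camera, or how “pick up key” turns into motor commands.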

It turns out that parsing symbols is relatively easy while those implementation details are colossally hard and would still be flummoxing engineers thirty years later. And it was not at all clear that that’s what was going on in the head of somebody who found their door to be locked.

Brooks trashed all of this. Whereas the AI establishment saw the following pipeline:

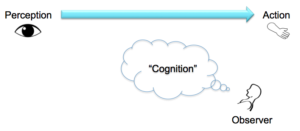

He saw simply:

with the symbolic representation and “cognition” existing only in the mind of an observer. Perception led directly to action in animals and humans, without passing through representation. We carried no 3D model of the world, no symbolic representation and no map. The whole of AI research was advancing down a dead-end street. And he’d built a robot to prove it.

This robot could navigate the office, avoiding obstacles. You could wander into its path and the machine would pause and move around you. This sounds trivial but is still hard to get working today and was quite beyond anything AI could do in 1986. Rather than a series of functional modules between perception and action

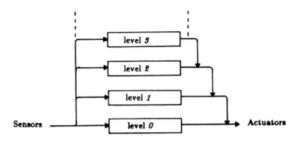

the subsumption architecture on which the robot operated decomposed the problem into layers of behaviour, each one operating simultaneously and in parallel:

The behaviours themselves are not separate from “perception” and “action”; they overlap with subsets of both. At its most basic level, the robot avoids collisions, even when still. If someone approaches a stationary robot, it will back away. This lowest level behaviour can operate in isolation from the rest.

When you add a second level of behaviour called wander, the robot will wander about in random directions. This behaviour “subsumes” or inhibits the lower-level “avoid collision” behaviour. But critically, if an object appears in its path, then “avoid collisions” kicks in again and the robot will pause or back off for a moment. Adding a third level of behaviour where the robot explores by picking a distant point in the room and heading for it will subsume the two lower layers, which can still take over when necessary.

The end result of the blending of these three very simple behaviours is a robot that appears to explore its environment in a highly intelligent manner. It has no map and no 3D model, but it’s robust in a chaotic and unpredictable environment. Its behaviour is “animal-like”.
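The layering described above can be sketched in a few lines of Python. This is only one simple reading of the idea, not Brooks’s implementation: his robot used a network of finite state machines, whereas here each behaviour is a plain function, and the names (`Sensors`, `Command`, `arbitrate`, `SAFE_DISTANCE`) and numeric values are all assumptions of mine.

```python
import random
from dataclasses import dataclass
from typing import Optional

SAFE_DISTANCE = 0.5  # metres; hypothetical threshold for the collision reflex


@dataclass
class Sensors:
    obstacle_distance: float       # metres to the nearest obstacle ahead
    goal_bearing: Optional[float]  # degrees to a chosen distant point, if any


@dataclass
class Command:
    speed: float  # negative means back off
    turn: float   # degrees
    source: str   # which behaviour produced the command


def avoid(s: Sensors, rng: random.Random) -> Optional[Command]:
    """Layer 0: back away when something is too close, otherwise stay silent."""
    if s.obstacle_distance < SAFE_DISTANCE:
        return Command(-0.2, 0.0, "avoid")
    return None


def wander(s: Sensors, rng: random.Random) -> Optional[Command]:
    """Layer 1: amble off in a random direction."""
    return Command(0.3, rng.uniform(-30.0, 30.0), "wander")


def explore(s: Sensors, rng: random.Random) -> Optional[Command]:
    """Layer 2: head for a distant point, if one has been picked."""
    if s.goal_bearing is None:
        return None
    return Command(0.5, s.goal_bearing, "explore")


def arbitrate(s: Sensors, rng: random.Random) -> Command:
    """Pick the command that reaches the motors on this tick."""
    # The collision reflex takes over whenever it is triggered.
    reflex = avoid(s, rng)
    if reflex is not None:
        return reflex
    # Otherwise the highest layer with something to say wins.
    for behaviour in (explore, wander):
        command = behaviour(s, rng)
        if command is not None:
            return command
    return Command(0.0, 0.0, "idle")  # not reached while wander always responds
```

Calling `arbitrate` once per control tick with fresh sensor readings is enough to see the layers trade control: clear the path and `explore` drives, remove the goal and `wander` takes over, step in front of the robot and `avoid` wins. There is no map and no world model anywhere in the loop.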

It gets better. We can then imagine evolution gradually layering these behaviours on top of each other to create more and more intelligent animals. The architecture suggests how our higher order faculties “subsume” our reptilian brain (at least when we’re sober). Even damaging a particular behaviour leaves the robot able to function to some degree, unlike a typical “programmed” robot that can fail if you change even a single byte.

Brooks built the robot out of a wired-up network of finite state machines, simple modules that operated in parallel and implemented the behaviours. This meant the robot was cheap, whereas competing autonomous devices such as Shakey required huge amounts of processing power to operate.

It is hard today to gauge the reaction to this paper. In his books (“Cambrian Intelligence” is the one to read) Brooks portrays himself as a heretic, lobbing hand grenades at a community of apoplectic straight-laced researchers. It’s also possible that they simply ignored him. A long out-of-print 1997 documentary called “Fast, Cheap and Out of Control”, named after one of his papers, lumps Brooks in with a gaggle of eccentrics such as lion tamers and mole rat photographers. The Amazon review for the documentary describes him as “a real wacko” rather than an MIT professor (although the two are perhaps not incompatible).

https://www.youtube.com/watch?v=jPbdwsZh38o

The core insight, that an observer may attribute representations and mechanisms to a creature that had nothing to do with what’s really going on, was made elegantly and concisely two years earlier by Valentino Braitenberg in his superb little book “Vehicles: Experiments in Synthetic Psychology”. In it, the neurobiologist and violinist posits thought experiments about a series of extremely simple robots made from nothing more than sensors, motors and a few wires. He shows how by progressively adding more components we could build robots that appear to show characteristics such as aggressiveness or cowardice. These are of course the interpretations of an observer who can make all kinds of elaborate guesses on how the robots work; the actual mechanisms are extremely simple. It is easier to design sophisticated creatures than it is to reverse engineer existing ones. If you buy one book after reading this, make it “Vehicles”.
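A rough Python sketch shows how little machinery is needed. It assumes a two-wheeled vehicle with a light sensor on each side, each wired straight to a motor, in the spirit of the simplest vehicles in the book; the function names and the 0-to-1 sensor scale are my own.

```python
from typing import Tuple


def motor_speeds(left_light: float, right_light: float,
                 crossed: bool) -> Tuple[float, float]:
    """Wire two light sensors directly to two motors.

    Uncrossed: each sensor drives the motor on its own side.
    Crossed: each sensor drives the motor on the opposite side.
    Returns (left_motor, right_motor).
    """
    if crossed:
        return right_light, left_light
    return left_light, right_light


def turn_direction(left_motor: float, right_motor: float) -> str:
    """A differential-drive vehicle veers away from its faster wheel."""
    if left_motor > right_motor:
        return "right"
    if right_motor > left_motor:
        return "left"
    return "straight"


# A lamp on the vehicle's left: the left sensor reads brighter.
left_light, right_light = 0.9, 0.2

# Uncrossed wires: the vehicle swerves away from the lamp ("cowardice").
print(turn_direction(*motor_speeds(left_light, right_light, crossed=False)))

# Crossed wires: the vehicle charges towards the lamp ("aggression").
print(turn_direction(*motor_speeds(left_light, right_light, crossed=True)))
```

The only difference between the “coward” and the “aggressor” is which wire goes to which motor. The personality is entirely in the eye of the observer.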

What distinguished Braitenberg and Brooks, the Wallace and Darwin of embodied robotics, from the AI mainstream was their focus on the body and low-level mechanisms instead of abstract high-level reasoning. We tend to see what we know, and programmers see the world in terms of symbols and procedural processes. They would assume that the way to tackle navigation is to first extract a 3D model from a video stream and then process it. Engineers and biologists, on the other hand, see the world in terms of physical entities. They would look at the mechanics, the physical characteristics of neurons and how they’re wired together.

Brooks, meanwhile, was on a roll. An insect-like robot called Genghis with realistic gaits followed in 1988. He designed My Real Baby, a lifelike robotic doll for Hasbro. He founded iRobot, still the world’s leading supplier of robotic vacuum cleaners (they’ve produced 16 million of them), all built using the subsumption architecture. He even helped design Sojourner, the rover that landed on Mars in 1997, although NASA reined in his wilder plans for sending a swarm of “fast, cheap and out of control” robots to the red planet. Not bad for “a wacko”.

His work inspired the more eccentric Mark Tilden, a hobbyist fond of dressing like a cowboy and building animal-like robots out of scrap electronics. These were always simple, generally with analogue circuitry and heavily inspired by Brooks and Braitenberg. As Brooks was championing building robots with little computational power, Tilden wondered, “Well how about practically none?” His philosophy of “BEAM” (Biology Electronics Aesthetics Mechanics) robotics is a bit nebulous, including a moral imperative to use recycled electronic junk rather than new components, but it has supplied a much better “three laws of robotics” than Asimov’s tedious and over-cited set. Tilden’s Laws are: 1. A robot must protect its existence at all costs; 2. A robot must obtain and maintain access to a power source; 3. A robot must continually search for better power sources. More concisely:

- Protect thy ass

- Feed thy ass

- Move thy ass to better real estate

Just like the imaginary robots in “Vehicles”, his robots show surprisingly sophisticated behaviour generated with very simple circuitry. This has made them extremely popular with toy companies. In sheer numbers, Tilden is perhaps the world’s most successful robot builder, surpassing even Brooks. His “toy” robots have sold more than 22 million units throughout the world (making him more successful in those terms than iRobot) yet his work is little known or discussed in mainstream robotics. He appears to prefer life on the edge.

For Brooks himself, by 1993 doubt appeared to be creeping in. In his early papers the path to follow had seemed clear: work your way up the evolutionary chain. But instead of continuing to build up step by step to more and more sophisticated “animal” robots by layering on new behaviours, he and his students decided to jump directly to humanoids. Cog and Kismet modelled certain aspects of human behaviour such as social interaction but were not really extensions of the earlier machines. Brooks and his team had ignored their own advice and tried to skip the lower orders to build a complete system. In his 2002 book “Flesh and Machines”, Brooks wrote:

“I had been thinking that after completing the current round of insect robots, perhaps we should move on to reptile-like robots. Then perhaps a small mammal, then a larger mammal and finally a primate. At that rate, given that time was flowing on for me, I might be remembered as the guy who built the best artificial cat. Somehow that did not quite fit my self-image.”

I wonder what he thinks of that statement today. The obvious riposte is that once you know how to build a functionally complete artificial cat then you probably understand 90% of how human intelligence works. Perhaps all our thought, our languages and culture are generated by a few thin layers of behaviour on top of a vast animal core. What would Brooks have achieved had he continued down his original path?

Meanwhile, conventional programmers continued to plug away at their higher-level symbolic representations. In 2006 a Silicon Valley-based robotics company called Willow Garage created ROS, an open-source robotic software framework that quickly became the industry standard. The revival of another forgotten technology, neural networks (rebranded as “deep learning”), was now radically out-performing traditional perceptual methods in computer vision. A robot could finally look at a video stream and extract a symbolic representation. It could create 2D and 3D maps of the world. This was classic AI with more CPU plus a few new tricks, and it could perform real-world tasks. The industry exploded and the subsumption architecture became a footnote in history.

At a recent robotics conference I collared a programmer who works with Brooks today at Rethink. “Whatever happened to the subsumption architecture?” I asked him. “It doesn’t scale,” was the terse reply. It could get to a certain level of sophistication in performing tasks, but apparently no further.

SLAM-based, “conventional AI” robot vacuum cleaners from Neato outperform iRobot’s Roombas. Baxter, the flagship industrial robot of Brooks’s new company Rethink Robotics, runs on ROS.

https://www.youtube.com/watch?v=fCML42boO8c

In the SXSW interview, Brooks sounds weary. Beyond the inanity of the interviewer’s questions, he seems depressed by the lack of progress along the lines he had originally envisioned. Gone is the swaggering revolutionary of the 1980s and in his place is a slightly grouchy elder statesman trying to lower the expectations of young enthusiasts. Being the most prominent and successful roboticist in the world isn’t enough; we measure ourselves by what we expected to achieve, not by what we actually did.

And yet… The new wave of deep-learning-based AIs shows highly specialised faculties, not general intelligence. The ROS-driven humanoids in the DARPA challenge can barely take a step, vainly attempting to calculate the required position of every single joint and motor in real time. None of these robots feels natural. Maybe more CPU and the latest tricks have their limits.

In the shadows of academia, a few researchers continue to plough Brooks’s field, working on biologically inspired robots where much of the “processing” is performed by the body itself. Unlike the DARPA robots, spring-driven “passive walkers” can stroll down a hill without electronics or even power, stepping naturally over obstacles. Soft robotics and artificial spinal cords are driving some bizarre creatures that are crawling out of the labs.

Brooks inspired all of this. He may have to be content with being the Marx, not the Lenin, of the revolution, but embodied robotics, with layers of loosely coupled behaviours, may just be the architecture of the future. His finite state machines and LISP code are dead but the insights behind them are driving synthetic turtles, lampreys, salamanders, octopi and humanoids, those elusive artificial human companions.
